Practice Terraform using Christmas trees

Terraform is a great tool to manage your infrastructure via plain text. It helps you detect what has changed and work out how to bring the infrastructure to the state you want it to be in.

Terraform has "providers" (adapters) for all the important cloud services such as Azure and AWS, but there are also some more obscure ones, like Spotify or Dominos.

To fiddle around a bit with the concepts of Terraform, we'll use the Christmas tree provider!

Getting started with Terraform

First, we'll need a working Terraform installation. HashiCorp has a good installation guide for all the major platforms; I'm going to use Ubuntu here.

After installing the needed dependencies (like gnupg), adding the HashiCorp GPG key and adding their Debian package repository, we can install Terraform using

sudo apt install terraform

After this, we can check that it was installed:

adrianus@adrianus-ThinkPad:~$ terraform -v
Terraform v1.5.7
on linux_amd64

Setting up the provider

With Terraform installed, we can get going and create a new file main.tf, where we can add the provider with its version. We could also configure options for our provider, but christmas-tree doesn't need any ({}).

terraform {
  required_providers {
    christmas-tree = {
      source  = "cappyzawa/christmas-tree"
      version = "0.6.1"
    }
  }
}

provider "christmas-tree" {}

Now we can initialize Terraform:

$ terraform init

This will set up Terraform for our project, create a .terraform folder and download the provider for us.
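Which provider release terraform init downloads is decided by the version argument in required_providers. The exact pin "0.6.1" always fetches that one release. As a minimal sketch of an alternative, assuming you would rather accept later patch releases, the pessimistic constraint operator ~> could be used instead. Whether any newer 0.6.x releases of this provider exist is not something this post confirms.

```hcl
# Sketch only: the same required_providers block as in main.tf,
# but with a pessimistic version constraint instead of an exact pin.
terraform {
  required_providers {
    christmas-tree = {
      source = "cappyzawa/christmas-tree"

      # "~> 0.6.1" allows >= 0.6.1 and < 0.7.0, i.e. patch releases only.
      version = "~> 0.6.1"
    }
  }
}
```

Terraform records the version it selected in the dependency lock file .terraform.lock.hcl, so after loosening a constraint you would typically run terraform init -upgrade to let it pick a newer matching release.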

Creating a resource

Now let's create our first Christmas tree. According to the documentation, we can define a new "Christmas tree" resource as follows:

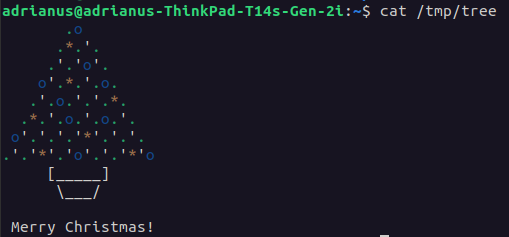

resource "christmas-tree" "my-tree" {
  path        = "/tmp/tree"
  ball_color  = "red"
  star_color  = "yellow"
  light_color = "white"
}

We can now check what Terraform wants to do, based on this resource:

$ terraform plan

Terraform will perform the following actions:

  # christmas-tree.my-tree will be created
  + resource "christmas-tree" "my-tree" {
      + ball_color  = "red"
      + id          = (known after apply)
      + light_color = "white"
      + path        = "/tmp/tree"
      + star_color  = "yellow"
    }

Plan: 1 to add, 0 to change, 0 to destroy.

Nice! It recognizes a new resource and indicates that it wants to create it.

How Terraform works

When working with Terraform, three things are important:

1. The current reality: your actual (infrastructure) resources.

2. Terraform's internal state: this is what Terraform thinks the current status of your resources is. It is maintained in the file terraform.tfstate.

3. The definition of what your resources should be, kept in main.tf. This is the file we created and which we're going to change.

Now when running terraform plan, Terraform first checks what is actually there (1.) so that it can refresh its internal state (2.). It then compares the desired state (3.) against the current state (2.) and maps out the differences (add, update, replace, delete), which it prints as the output of plan.

After making a literal "plan" of what to do, we can accept it with terraform apply. This applies all the recognized differences, after which the planned state (3.) matches both the internal state of Terraform (2.) and your actual resource state (1.).

If we run terraform plan again, but this time with -out tfplan, we can pass the saved plan to apply and be sure that exactly the planned changes will be applied. This looks as follows:

$ terraform apply "tfplan"
christmas-tree.my-tree: Creating...
christmas-tree.my-tree: Creation complete after 0s [id=/tmp/tree]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Detecting & applying changes

What if we change something small, for example the ball_color? Running terraform plan now reports an in-place update.
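The edit in question could look like the following in main.tf. This is a sketch that assumes ball_color is the only line that was touched; the new value "blue" is taken from the plan output, and the other values are the ones from the resource defined earlier.

```hcl
# Sketch of the edited resource: only ball_color differs from before.
resource "christmas-tree" "my-tree" {
  path        = "/tmp/tree"
  ball_color  = "blue" # was "red"; the only edited line
  star_color  = "yellow"
  light_color = "white"
}
```

With that edit saved, terraform plan prints: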

christmas-tree.my-tree: Refreshing state... [id=/tmp/tree]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  ~ update in-place

Terraform will perform the following actions:

  # christmas-tree.my-tree will be updated in-place
  ~ resource "christmas-tree" "my-tree" {
      ~ ball_color  = "red" -> "blue"
        id          = "/tmp/tree"
        # (3 unchanged attributes hidden)
    }

Plan: 0 to add, 1 to change, 0 to destroy.

Breaking changes

If we want our Christmas tree at a different location, say /tmp/new-tree instead of /tmp/tree, the change is so big that Terraform cannot update our existing resource in place. Instead, it will destroy the old tree and create a new one. Such a replacement is marked with -/+ in the plan output.
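In main.tf, the relocation is again a one-line edit. The sketch below assumes only path was changed. The post does not say whether the earlier ball_color change was applied, so the value shown for ball_color is an assumption.

```hcl
# Sketch of the edited resource: only path differs from before.
resource "christmas-tree" "my-tree" {
  path        = "/tmp/new-tree" # was "/tmp/tree"; changing it forces a replacement
  ball_color  = "red"           # assumption: use "blue" if the earlier change was applied
  star_color  = "yellow"
  light_color = "white"
}
```

Planning this change and saving the plan gives: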

$ terraform plan -out tfplan
christmas-tree.my-tree: Refreshing state... [id=/tmp/tree]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
-/+ destroy and then create replacement

Terraform will perform the following actions:

  # christmas-tree.my-tree must be replaced
-/+ resource "christmas-tree" "my-tree" {
      ~ id          = "/tmp/tree" -> (known after apply)
      ~ path        = "/tmp/tree" -> "/tmp/new-tree" # forces replacement
        # (3 unchanged attributes hidden)
    }

Plan: 1 to add, 0 to change, 1 to destroy.

Thanks for reading, happy terraforming and merry Christmas!